Monte Carlo vs. Anomalo: Broad Observability vs. Deep Anomaly Detection

January 27, 2026

As we navigate 2026, the stakes for data integrity have never been higher. Gartner predicts at least 30% of generative AI projects will be abandoned after the proof-of-concept phase due to poor data quality, escalating costs, and unclear business value. While the previous era was defined by “data observability” to ensure data stack plumbing worked reliably, the AI era demands something more: data trust built on a foundation of deep, content-level comprehension.

Many organizations find themselves at a crossroads between two distinct philosophies for fixing broken data foundations. Understanding the fundamental differences between these two is critical for any leader tasked with turning data into a competitive advantage.

Two Distinct Approaches to Data Trust

The history of Monte Carlo and Anomalo represent two unique perspectives on the challenges facing organizations today and how to address them. While both emerged around the same time, their founding purposes led them down different paths.

Monte Carlo coined the term “data observability” to mirror software observability (DevOps). Their goal was to eliminate “data downtime”, when data is partial, erroneous, missing, or otherwise inaccurate. They focused on the data engineer, building a “guard” to monitor pipeline health, freshness, and schema changes.

Anomalo was founded to enable deep data comprehension and identify “unknown unknowns.” The founders believed that manual rules were unsustainable at scale. They built an AI-first platform specifically to help data scientists and analysts trust the content of their data without having to write thousands of validation rules.

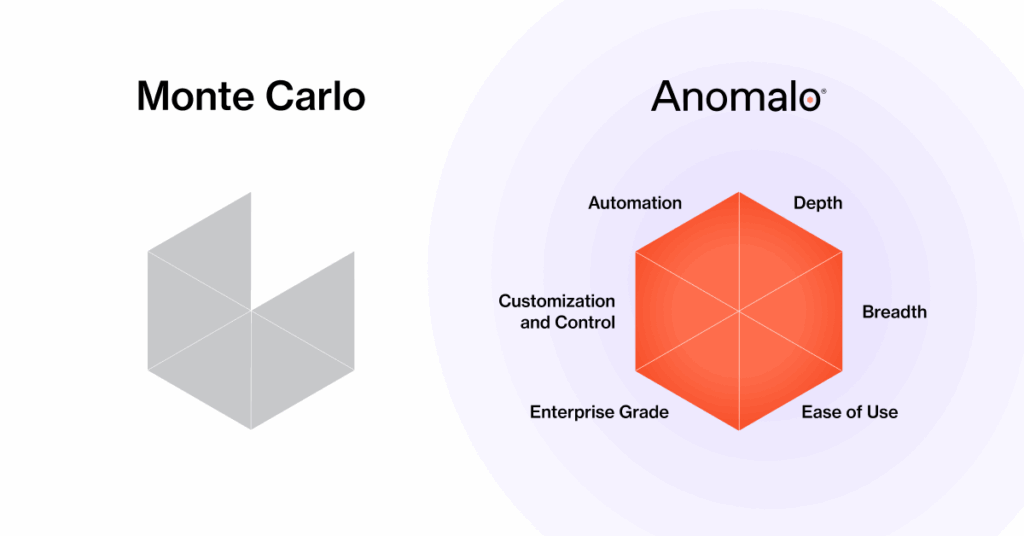

Different Lens, Different Perspective

Monte Carlo’s “observability” approach focuses on metadata checks that look at the surface level for hints of issues. This approach is necessary for pipeline reliability, but surface-level checks miss abnormal values, hidden correlations, and subtle distribution shifts. It trades depth for breadth.

Anomalo’s “data trust” approach ensures that data is trustworthy at the point of decision, from reports to custom LLMs. To accomplish this, Anomalo emphasizes deep understanding of the data itself. Anomalo’s approach hunts for and discovers issues observability misses by analyzing the actual contents of the data. This approach builds confidence in the foundational data for AI deployments.

Comparing the Two Approaches

Monte Carlo’s DataOps-first approach centers on broad observability and may be right for you if:

- You need comprehensive pipeline and infrastructure monitoring: You want a single platform to track FinOps (query performance and cost alerts), pipeline reliability (Gantt views of query runtimes), and standard DataOps signals like freshness and schema breaks.

- You require broad, metadata-level lineage: You have a complex modern data stack and need field-level lineage that correlates alerts across warehouses, lakes, and BI tools to visualize “impact radius”.

- You are scaling AI agent initiatives via engineering: You want an Agentic Observability layer designed to help engineers triage AI application failures and monitor model input/output drift using “LLM-as-a-judge” evaluation techniques.

Anomalo’s analyst-first approach uses AI to provide deep data trust and may be right for you if:

- You need to trust the content, not just the delivery: You’ve had instances where the pipeline was “on time” (green), but the data inside was wrong (e.g., a currency conversion error, a 0 value in a critical field, or a subtle distribution shift that skewed an AI model).

- You are scaling AI or regulated analytics: You are building RAG applications or LLMs and need to monitor both structured and unstructured data for quality, bias, and PII to ensure your AI isn’t “thinking” based on flawed inputs.

- You want to democratize data quality: You want to move away from the engineering bottleneck. You need a platform where business analysts and product owners can use natural language with AIDA to self-serve their own data quality checks and root-cause analysis.

Customer Results

Before we dive deeper into this comparison, let’s look at some customer results to gauge the effectiveness of Anomalo’s “data trust” approach:

- ADP went from 700 manual checks to 16,000+ machine learning-powered validations

- Afterpay (Block) analysts address 30% more data issues in a self-service capacity

- Named a Strong Performer in the 2025 Gartner® Peer Insights™ “Voice of the Customer” for Augmented Data Quality Solutions, Anomalo earned a 95% willingness to recommend.

The Six Pillars of Data Quality without Compromise

In the legacy era of data management, organizations were forced to choose between rigor and agility. You could have deep, manual validation that was impossible to scale, or you could have broad, surface-level monitoring that missed the subtle shifts that actually break models. Data quality without compromise was introduced to identify trade-offs and bridge the gap between technical engineering requirements and the high-stakes needs of data scientists and business analysts.

Six strategic pillars redefine how an enterprise achieves data trust. At its core, it is about moving beyond “observability”, which simply tells you if a pipeline is running, to deep content trust. This means finding unanticipated data quality issues, “unknown unknowns”, that metadata signals miss, and extending that same level of rigor to the unstructured data that fuels today’s Generative AI initiatives.

These pillars shift the center of gravity from the data engineer to the data consumer. By democratizing ownership through an analyst-first UI and generative AI assistants to ensure that those who understand the business context of the data are the ones empowered to monitor and trust it. This isn’t just about reducing engineering toil; it’s about creating a scalable culture of data governance that can actually keep pace with the speed of AI.

Below is a side-by-side comparison of Monte Carlo and Anomalo using the six pillars of data quality without compromise.

| Pillar | Monte Carlo | Anomalo |

| Depth of Data Understanding | Surface-Level Metadata. Primarily uses AI/ML to suggest ranges for data quality rules and to correlate metadata checks (freshness, volume, schema) which miss abnormal values. | Deep Content Analysis AND Contextual Accuracy. Leverages AI-native, deep anomaly detection by inspecting the actual contents of the data. Anomalo’s unsupervised ML learns data patterns and flags the subtle distribution shifts, abnormal values, and hidden correlations that metadata signals miss.

Zalando ensures trustworthy data for 30+ teams to make timely, reliable, and accurate decisions across fast-moving ecommerce operations. |

| Automated Anomaly Detection | AI-Assisted Rules or Automated Thresholds. Limits detection to anticipated issues. | AI-Native Automation AND Uncover Unknowns. Uses UML to automatically catch >85% of issues without manual configuration.

Discover found that traditional rules-based approaches would require 5 million SME hours. They chose Anomalo because it eliminates manual rule creation through AI-native automation |

| Comprehensive Data Coverage | Tables-Only. Limited to structured tables and tables with unstructured text columns, ignoring documents, logs, and PDFs required for AI. | Structured AND Unstructured Coverage. Monitors all data types including unstructured content for bias and PII.

Nationwide partnered with Anomalo specifically to expand data quality monitoring beyond structured rows and columns into unstructured assets like claims notes and contracts. |

| Ease of Use | Engineer-Centric UI. Perpetuates bottlenecks; keeps quality gated behind technical teams. | Data Analyst-First UI and Natural Language Access. AIDA democratizes monitoring, triage, and RCA for self-service.

Lebara saw their time spent on data quality issues drop from 70% to less than 30% of the data team’s workload, helping them save over 5,000 person-hours annually. |

| Customization and Control | Basic Custom Checks. Supports custom validation, but primary focus is on pipeline health. | Rigor AND Flexibility. Allows technical users to layer on custom SQL rules and specify key business metrics with alerting based on business criticality.

Kingfisher noted that customized data quality checks monitor specific scenarios that matter to the business. |

| Enterprise-Grade Security and Scale | Enterprise Security. Meets strict auditability and data residency requirements. | Enterprise Security AND Analyst-Scale Trust. Meets strict auditability and data residency requirements AND supports rapid, compliant self-service adoption across the enterprise.

Anomalo is chosen by Fortune 500 leaders like Block and Discover for the ability to handle petabyte-scale deployments under the strictest compliance mandates. |

Conclusion

The choice between Monte Carlo and Anomalo isn’t just a choice between tools; it is a choice between two distinct philosophies.

If your primary goal is broad observability in one tool (e.g., pipelines, costs, infrastructure, AI agents) to reduce the manual toil of your data engineering team, a pipeline-centric observability approach may be where you start. But in the era of Generative AI and automated decision-making, simply having broad, surface-level checks for your data + AI estate are no longer enough.

True data and AI trust requires deep data quality without compromise. It requires the depth to see inside your data, both structured and unstructured content, and the ability to democratize quality to empower every analyst and data scientist to own the integrity of their work. By pivoting from “healthy platforms” to healthy data, you take the first step towards organization-wide data and AI trust.

Ready to lead the shift?

Start building deep data trust for AI. Don’t let your AI initiatives become a Gartner statistic.

Request a demo today and see how Anomalo’s data quality without compromise makes your data, your teams, and your AI ready for the future.

Categories

- Data Governance

- Unstructured Data

Ready to Trust Your Data? Let’s Get Started

Meet with our team to see how Anomalo transforms data quality from a challenge into a competitive edge.